| Exam Name: | Administering Relational Databases on Microsoft Azure |
| --- | --- |
| Exam Code: | DP-300 |
| Vendor: | Microsoft |
| Certification: | Microsoft Certified: Azure Database Administrator Associate |
| Questions: | 341 Q&As |
| Shared By: | kaia |

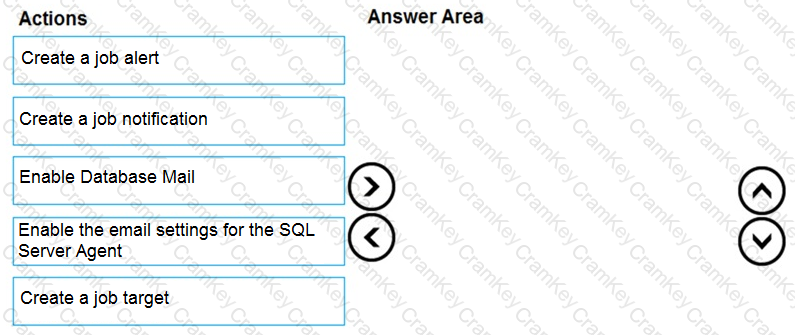

You have SQL Server on an Azure virtual machine named SQL1.

SQL1 has an agent job to back up all databases.

You add a user named dbadmin1 as a SQL Server Agent operator.

You need to ensure that dbadmin1 receives an email alert if a job fails.

Which three actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.
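For reference, the operator setup that this scenario takes as given can be expressed with the `msdb.dbo.sp_add_operator` system procedure. The sketch below is a minimal illustration, assuming you generate the T-SQL from Python. The email address and the helper name `add_operator_sql` are hypothetical (the scenario names only the operator), and the snippet covers the premise only, not the three answer steps.

```python
# Hypothetical values: the scenario names the operator (dbadmin1) but not an email address.
OPERATOR_NAME = "dbadmin1"
OPERATOR_EMAIL = "dbadmin1@example.com"  # assumption for illustration only


def add_operator_sql(name: str, email: str) -> str:
    """Build T-SQL that registers a SQL Server Agent operator in msdb (hypothetical helper)."""
    # Double any single quotes so the values stay valid inside T-SQL string literals.
    safe_name = name.replace("'", "''")
    safe_email = email.replace("'", "''")
    return (
        "EXEC msdb.dbo.sp_add_operator\n"
        f"    @name = N'{safe_name}',\n"
        "    @enabled = 1,\n"
        f"    @email_address = N'{safe_email}';"
    )


if __name__ == "__main__":
    # Print the statement so it can be pasted into SSMS or sqlcmd.
    print(add_operator_sql(OPERATOR_NAME, OPERATOR_EMAIL))
```

Generating the statement rather than executing it keeps the sketch free of any database driver dependency.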

You have 40 Azure SQL databases, each for a different customer. All the databases reside on the same Azure SQL Database server.

You need to ensure that each customer can only connect to and access their respective database.

Which two actions should you perform? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

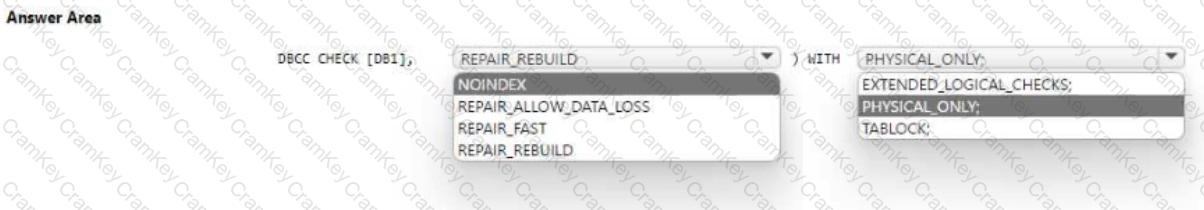

You have a SQL Server on Azure Virtual Machines instance that hosts a 10-TB SQL database named DB1.

You need to identify and repair any physical or logical corruption in DB1. The solution must meet the following requirements:

• Minimize how long it takes to complete the procedure.

• Minimize data loss.

How should you complete the command? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have an Azure Data Lake Storage account that contains a staging zone.

You need to design a daily process to ingest incremental data from the staging zone, transform the data by executing an R script, and then insert the transformed data into a data warehouse in Azure Synapse Analytics.

Solution: You use an Azure Data Factory schedule trigger to execute a pipeline that executes an Azure Databricks notebook, and then inserts the data into the data warehouse.

Does this meet the goal?
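As background on the "schedule trigger" part of the proposed solution, an Azure Data Factory schedule trigger is defined in JSON with a `recurrence` block and a list of pipeline references. The sketch below builds such a definition in Python for a once-a-day run. The trigger name, pipeline name, start time, and the helper `daily_schedule_trigger` are all hypothetical, and the snippet does not address whether the overall solution meets the goal.

```python
import json

# Hypothetical names: the scenario does not name the trigger, the pipeline, or a start time.
TRIGGER_NAME = "DailyStagingIngest"
PIPELINE_NAME = "IngestTransformLoad"
START_TIME = "2024-01-01T02:00:00Z"


def daily_schedule_trigger(trigger_name: str, pipeline_name: str, start_time: str) -> dict:
    """Return a Data Factory schedule trigger definition that runs one pipeline daily."""
    return {
        "name": trigger_name,
        "properties": {
            "type": "ScheduleTrigger",
            "typeProperties": {
                "recurrence": {
                    "frequency": "Day",  # recur on a daily basis
                    "interval": 1,  # every 1 day
                    "startTime": start_time,
                    "timeZone": "UTC",
                }
            },
            "pipelines": [
                {
                    "pipelineReference": {
                        "referenceName": pipeline_name,
                        "type": "PipelineReference",
                    },
                    "parameters": {},
                }
            ],
        },
    }


if __name__ == "__main__":
    # Emit the JSON that could be deployed as the trigger definition.
    print(json.dumps(daily_schedule_trigger(TRIGGER_NAME, PIPELINE_NAME, START_TIME), indent=2))
```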