| Field | Value |
| --- | --- |
| Exam Name | Designing and Implementing a Data Science Solution on Azure |
| Exam Code | DP-100 Dumps |
| Vendor | Microsoft |
| Certification | Microsoft Azure |
| Questions | 460 Q&A's |
| Shared By | coby |

You use Azure Machine Learning to deploy a model as a real-time web service.

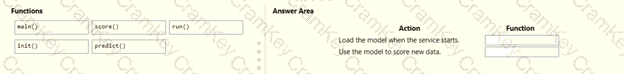

You need to create an entry script for the service that ensures that the model is loaded when the service starts and is used to score new data as it is received.

Which functions should you include in the script? To answer, drag the appropriate functions to the correct actions. Each function may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

You manage an Azure Machine Learning workspace named Workspace1.

You plan to create a pipeline in the Azure Machine Learning Studio designer. The pipeline must include a custom component. You need to ensure the custom component can be used in the pipeline. What should you do first?

You are developing a machine learning model.

You must run inference with the machine learning model for testing.

You need to use a minimal-cost compute target.

Which two compute targets should you use? Each correct answer presents a complete solution.

NOTE: Each correct selection is worth one point.

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You plan to use a Python script to run an Azure Machine Learning experiment. The script creates a reference to the experiment run context, loads data from a file, identifies the set of unique values for the label column, and completes the experiment run:

    from azureml.core import Run
    import pandas as pd

    run = Run.get_context()
    data = pd.read_csv('data.csv')
    label_vals = data['label'].unique()

    # Add code to record metrics here

    run.complete()

The experiment must record the unique labels in the data as metrics for the run that can be reviewed later.

You must add code to the script to record the unique label values as run metrics at the point indicated by the comment.

Solution: Replace the comment with the following code:

    for label_val in label_vals:
        run.log('Label Values', label_val)

Does the solution meet the goal?
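When weighing this solution, it helps to recall that `Run.log` may be called several times with the same metric name, and the run then stores the logged values as a vector under that name. The sketch below mimics only that accumulation behavior so it can run without an Azure workspace. `StandInRun` and `record_unique_labels` are hypothetical names for illustration, not part of the `azureml` SDK, and a plain list replaces the pandas column.

```python
from collections import defaultdict


class StandInRun:
    """Hypothetical stand-in for azureml.core.Run; mimics log() only."""

    def __init__(self):
        # Each metric name maps to the list of values logged under it.
        self.metrics = defaultdict(list)

    def log(self, name, value):
        # Repeated calls with the same name append to that metric's values.
        self.metrics[name].append(value)

    def complete(self):
        pass


def record_unique_labels(run, labels):
    """Log each distinct label once under a single metric name."""
    # dict.fromkeys keeps first-appearance order, as pandas unique() does.
    unique_vals = list(dict.fromkeys(labels))
    for label_val in unique_vals:
        run.log('Label Values', label_val)
    return unique_vals


run = StandInRun()
record_unique_labels(run, ['cat', 'dog', 'cat', 'bird', 'dog'])
run.complete()
print(dict(run.metrics))
```

With a real run object, the same loop would leave one metric named `Label Values` holding every unique label, which can be reviewed after the run completes.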